In today’s hyperconnected world, the volume of data being generated is staggering. From mobile devices, vehicles, industrial sensors, and smart cameras to wearables and IoT-enabled appliances, billions of endpoints produce continuous streams of information. Traditionally, this data would be sent to centralized cloud servers for processing. While cloud computing remains a cornerstone of digital infrastructure, latency, bandwidth constraints, and privacy concerns are driving a major shift: processing data closer to where it is generated. This is where Edge AI comes into play. It blends the benefits of artificial intelligence with the efficiency of edge computing to enable real-time decision-making at scale.

Edge AI refers to the deployment of AI models and algorithms directly onto edge devices, such as sensors, gateways, smartphones, robots, and embedded devices. Instead of relying solely on cloud-based servers for processing, edge devices analyze data locally and make decisions with minimal delay.

By integrating AI into edge hardware, systems can collect data, process it, and act on the resulting insights in near real time, gaining faster responses, reduced dependency on network connectivity, and improved efficiency and privacy. In applications ranging from autonomous vehicles to factory automation, Edge AI represents a paradigm shift in how intelligence is delivered.

Why the Edge Matters in Real-Time Decision Making

Modern AI applications are no longer just about big data analytics. Many of them require split-second decision-making. Let’s consider a few examples:

Autonomous Driving: A self-driving car encountering a sudden road obstacle must react immediately. Sending sensor data to the cloud, waiting for analysis, and receiving a response could cause fatal delays. Edge AI allows on-the-spot processing, which supports safer navigation in dynamic environments.

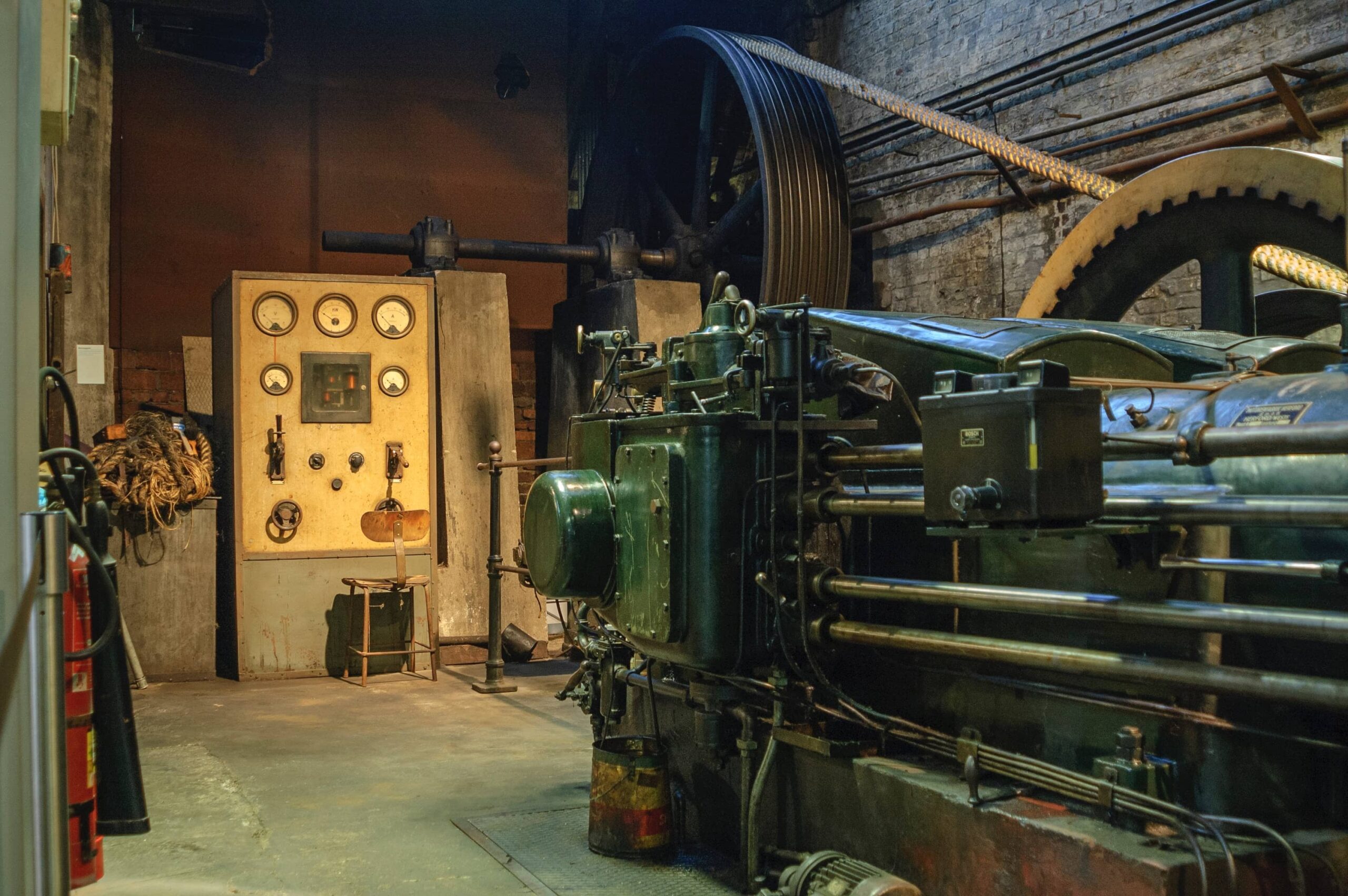

Industrial Automation: In manufacturing, machines equipped with vibration sensors can detect anomalies and shut down instantly to prevent damage, reduce downtime, save costs, and protect workers.

Healthcare Diagnostics: Wearable devices monitoring vital signs can instantly alert medical personnel when irregularities are detected, without relying on continuous cloud communication.

In each case, latency is critical, and cloud-only infrastructure can’t guarantee the rapid responses these situations demand. Edge AI solves this by keeping the “thinking” as close as possible to the “doing”.
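To make the industrial example concrete, here is a minimal sketch of an on-device check that flags a vibration reading when it strays far from the recent baseline. The class name `VibrationMonitor`, the window size, and the z-score threshold are illustrative assumptions, not values from any particular product, and a real deployment would likely use a trained model rather than a simple statistical rule.

```python
from collections import deque
from statistics import mean, stdev


class VibrationMonitor:
    """Flags readings that deviate sharply from the recent local baseline."""

    def __init__(self, window=50, threshold=4.0, min_samples=10):
        self.readings = deque(maxlen=window)  # recent normal readings only
        self.threshold = threshold            # z-score that counts as an anomaly
        self.min_samples = min_samples        # warm-up before any decision

    def is_anomaly(self, value):
        """Return True for an outlier; otherwise add the value to the baseline."""
        if len(self.readings) >= self.min_samples:
            mu = mean(self.readings)
            sigma = stdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                return True  # keep the outlier out of the baseline
        self.readings.append(value)
        return False


monitor = VibrationMonitor()
normal = [1.0 + 0.01 * (i % 5) for i in range(30)]  # synthetic steady vibration
flags = [monitor.is_anomaly(v) for v in normal]
spike_detected = monitor.is_anomaly(5.0)             # synthetic fault reading
```

Because the whole decision happens in `is_anomaly`, the machine can be stopped without a network round trip; only the fact that a shutdown occurred needs to be reported upstream.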

The Scalability of Edge AI

One might assume that processing at the edge limits scalability because each device must have computational capacity. However, modern advancements in hardware and software are making scalable Edge AI a reality:

Lightweight AI Models: Techniques like model pruning, quantization, and knowledge distillation streamline complex AI models, so they can run efficiently on resource-constrained devices.

Specialized Hardware: AI-accelerating chips (such as NVIDIA Jetson modules, Google’s Edge TPU, or Intel Movidius accelerators) are designed specifically for edge-based inference tasks.

Federated Learning: This distributed AI approach trains models across multiple devices without sharing raw data with a central server. Each device trains on its own data and shares only model updates, so it contributes to model improvements while maintaining data privacy.
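The aggregation step in federated learning can be sketched as a weighted average of the weights each device reports, in the style of federated averaging (FedAvg). The function name, the flat list-of-floats weight format, and the sample counts below are simplifying assumptions for illustration; production frameworks add secure aggregation, client sampling, and much more.

```python
def federated_average(client_updates):
    """Combine client weight vectors, weighting each by its local sample count.

    client_updates: list of (weights, num_samples) tuples, where weights is a
    list of floats with the same length for every client.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total_samples
    return global_weights


# Hypothetical updates from three devices: (locally trained weights, sample count)
updates = [
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
    ([5.0, 6.0], 100),
]
new_global = federated_average(updates)
```

Only the weight vectors and sample counts leave each device here; the raw training data never does. The device with 300 samples pulls the average toward its weights three times as strongly as either of the others.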

Together, these advances in models, hardware, and training mean organizations can deploy millions of intelligent edge nodes across geographies that work together, learning locally, adapting quickly, and operating with minimal external dependencies.
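Of the lightweight-model techniques listed earlier, quantization is the easiest to show in a few lines. The sketch below assumes symmetric, per-tensor int8 quantization with a single scale factor; the function names and the sample weights are made up for illustration, and real toolchains typically use calibration data and per-channel scales.

```python
def quantize_int8(weights):
    """Map floats to int8-range integers using one symmetric scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the quantized integers."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.003, 0.45, -0.60]  # made-up layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Storing each weight in one byte instead of a four-byte float32 cuts the model's weight storage by roughly a factor of four. The cost is rounding error of at most half a scale step per weight; in this example the smallest weight, 0.003, rounds to zero.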

Benefits of Edge AI at Scale

1. Ultra-Low Latency

By processing data locally, Edge AI removes the network round trip and can cut latency from seconds to milliseconds. Such responsiveness is essential for time-sensitive operations in autonomous systems, critical safety applications, and real-time analytics.

2. Reduced Bandwidth Consumption

Sending huge volumes of raw data to the cloud consumes a massive amount of bandwidth and incurs storage costs. With Edge AI, devices transmit only refined results or exceptions rather than entire data sets, optimizing network efficiency.

3. Increased Reliability

Because inference runs on the device itself, Edge AI systems keep functioning through intermittent connectivity or outright network outages.

4. Enhanced Privacy and Security

Since sensitive data is processed locally, there’s less exposure through transmission, reducing vulnerabilities and helping organizations comply with regulations such as GDPR or HIPAA.

5. Cost Efficiency

By reducing reliance on central processing and bandwidth-heavy data transfer, Edge AI lowers infrastructure expenses, particularly in distributed environments.
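Two of the benefits above, reduced bandwidth and increased reliability, can be illustrated together. The following is a minimal sketch, assuming a hypothetical `EdgeReporter` class that transmits only out-of-range readings and queues them while the uplink is down. The thresholds, the `send` callback, and the simulated link are all assumptions made for the example.

```python
from collections import deque


class EdgeReporter:
    """Sends only out-of-range readings upstream and queues them while offline."""

    def __init__(self, low, high, send, max_buffer=1000):
        self.low, self.high = low, high
        self.send = send                         # callable; returns True on success
        self.pending = deque(maxlen=max_buffer)  # oldest events drop first when full

    def process(self, reading):
        """Handle one reading locally; queue it only if it is an exception."""
        if self.low <= reading <= self.high:
            return                               # normal reading: nothing to transmit
        self.pending.append(reading)
        self.flush()

    def flush(self):
        """Deliver queued events in order; stop at the first failure."""
        while self.pending:
            if not self.send(self.pending[0]):
                break                            # link is down; retry on a later call
            self.pending.popleft()


# Simulated uplink (hypothetical): delivery fails while the link is offline.
link = {"online": False}
delivered = []

def fake_send(event):
    if link["online"]:
        delivered.append(event)
    return link["online"]

reporter = EdgeReporter(low=36.0, high=38.0, send=fake_send)
for temp in [36.6, 36.8, 39.5, 37.0, 35.1]:
    reporter.process(temp)
queued_while_offline = list(reporter.pending)
link["online"] = True
reporter.flush()
```

Of the five readings, only the two exceptions are ever transmitted, and both survive the simulated outage in the local queue until the link returns.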

Looking Forward: The Scale Challenge and the Convergence of AI, 5G, and IoT

Edge AI applications span various industries, but deploying them at scale raises challenges around hardware limitations, model management, security, interoperability, and cost. Managing thousands or millions of edge devices requires sophisticated orchestration tools. Updating AI models across distributed deployments demands robust DevOps practices. Ensuring security becomes more complex when computational power resides at network edges rather than in protected data centers.
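One common practice for updating models across a large fleet is a staged rollout, where a new version reaches a small cohort first and the cohort grows as confidence builds. The sketch below is one possible way to pick cohorts deterministically; the function name, the device ID format, and the version label are hypothetical.

```python
import hashlib


def in_rollout(device_id, model_version, percent):
    """Return True if this device falls inside the first `percent` of the fleet.

    Hashing the device ID together with the model version gives each device a
    stable bucket from 0 to 99, so cohorts only grow as `percent` is raised.
    """
    digest = hashlib.sha256(f"{model_version}:{device_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


fleet = [f"device-{i:04d}" for i in range(1000)]  # hypothetical device IDs
canary = {d for d in fleet if in_rollout(d, "v2", 5)}
half = {d for d in fleet if in_rollout(d, "v2", 50)}
everyone = {d for d in fleet if in_rollout(d, "v2", 100)}
```

Because the bucket depends only on the device ID and version, every device can evaluate the rule itself without a central coordinator keeping per-device state, and a device that received the update at 5% still qualifies at 50%.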

The rise of 5G networks is set to amplify Edge AI’s capabilities. 5G offers ultra-fast, low-latency connectivity between edge nodes and central systems, enabling complex hybrid processing models where edge devices handle immediate decision-making and offload deeper analysis to the cloud when necessary.
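A hybrid model of this kind needs a rule for deciding what stays on the device. One simple possibility, shown here as a sketch, is to act locally when the on-device model is confident and defer to the cloud otherwise. The function name, the score format, and the 0.8 threshold are assumptions for illustration only.

```python
def route_inference(local_scores, confidence_threshold=0.8):
    """Decide locally when the edge model is confident; otherwise defer.

    local_scores: dict mapping label -> probability from the on-device model.
    Returns (label, handled_by), where handled_by is "edge" or "cloud".
    """
    label, confidence = max(local_scores.items(), key=lambda kv: kv[1])
    if confidence >= confidence_threshold:
        return label, "edge"
    return None, "cloud"  # caller forwards the sample for deeper analysis


clear_case = route_inference({"obstacle": 0.95, "clear": 0.05})
unsure_case = route_inference({"obstacle": 0.55, "clear": 0.45})
```

Here the first call is resolved on the device as `"obstacle"`, while the second is ambiguous and is routed to the cloud. Raising the threshold shifts more traffic to the cloud in exchange for fewer low-confidence local decisions.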

Coupled with the expansion of IoT, the combination of Edge AI and 5G will enable billions of devices to act autonomously yet collaboratively. AI-driven orchestration systems will manage massive edge networks, automatically reallocating workloads and updating models in real time.

We’re also seeing AutoML for edge environments, enabling devices to retrain or fine-tune models based on changing conditions without human intervention. This self-optimizing capability will be crucial for scaling edge networks across diverse applications.

Conclusion

Edge AI is the backbone of the next generation of intelligent, autonomous systems. By processing information locally, organizations can make split-second decisions, operate at massive scale, and protect data privacy, all while optimizing network and infrastructure costs.